Irony alert: Fabricated references have been discovered in research papers accepted at NeurIPS, the renowned artificial intelligence conference

AI Detection Uncovers Fabricated Citations at Major AI Conference

The AI detection company GPTZero analyzed all 4,841 papers accepted at the renowned Neural Information Processing Systems (NeurIPS) conference, held recently in San Diego. The company found 100 fabricated citations spread across 51 papers and confirmed each of those citations as fake, it told TechCrunch.

Having a paper accepted by NeurIPS is a significant milestone in the AI research community. And since these are some of the leading researchers in AI, it is hardly surprising that they would use large language models (LLMs) for the tedious work of compiling citations.

However, these findings need to be kept in perspective: 100 confirmed fake citations across 51 papers is a small number. Each paper typically contains many references, so measured against the total number of citations in all 4,841 accepted papers, the fabricated ones amount to a statistical blip.

Additionally, a single incorrect citation does not necessarily undermine the validity of a research paper. As NeurIPS explained to Fortune, which first reported on GPTZero’s findings, “Even if 1.1% of the papers have one or more incorrect references due to the use of LLMs, the content of the papers themselves [is] not necessarily invalidated.”
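The 1.1% figure in that statement can be checked against the numbers GPTZero reported. The short Python sketch below uses only the counts given in this article (4,841 accepted papers, 51 flagged papers, 100 fabricated citations); the function and variable names are illustrative and are not taken from GPTZero or NeurIPS.

```python
# Counts as reported in this article; names are illustrative only.
ACCEPTED_PAPERS = 4841
FLAGGED_PAPERS = 51
FABRICATED_CITATIONS = 100


def percent_of_papers_flagged(flagged: int, total: int) -> float:
    """Percentage of accepted papers with at least one fabricated citation."""
    return 100.0 * flagged / total


def fabricated_per_flagged_paper(citations: int, flagged: int) -> float:
    """Average number of fabricated citations in each flagged paper."""
    return citations / flagged


paper_share = percent_of_papers_flagged(FLAGGED_PAPERS, ACCEPTED_PAPERS)
per_paper = fabricated_per_flagged_paper(FABRICATED_CITATIONS, FLAGGED_PAPERS)

print(f"{paper_share:.1f}% of accepted papers were flagged")
print(f"{per_paper:.2f} fabricated citations per flagged paper on average")
```

Rounded to one decimal place, the share of flagged papers matches the 1.1% that NeurIPS cited, and the flagged papers average roughly two fabricated citations each. The share of all citations that were fabricated cannot be computed from these figures alone, because the article does not give a total citation count.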

Nevertheless, the presence of fabricated references is still a concern. NeurIPS emphasizes its commitment to “rigorous scholarly publishing in machine learning and artificial intelligence,” as stated in a recent announcement. Each submission undergoes peer review by multiple experts, who are instructed to identify and report hallucinated content.

Citations serve as a key metric of academic influence, reflecting how widely a researcher’s work is recognized by peers. When AI tools fabricate references, they dilute the value of this academic currency.

Given the sheer volume of submissions, it’s understandable that peer reviewers might overlook a handful of AI-generated fake citations. GPTZero acknowledges this challenge, noting that its objective was to provide concrete data on how AI-generated errors slip through during what it describes as a “submission tsunami” that has overwhelmed the review process at major conferences. Its report even cites a May 2025 study, “The AI Conference Peer Review Crisis,” which discusses similar problems at leading conferences, including NeurIPS.

Still, it raises the question: Why didn’t the authors themselves verify the accuracy of the citations generated by LLMs? After all, they should be familiar with the sources they referenced in their own work.

Ultimately, this situation highlights a striking irony: If even the world’s foremost AI researchers, whose reputations are on the line, cannot guarantee the precision of their LLM-generated citations, what implications does this have for everyone else?

You may also like

Crypto Market Downturn Shakes Venture Capitalists Following $19 Billion Investment Wave

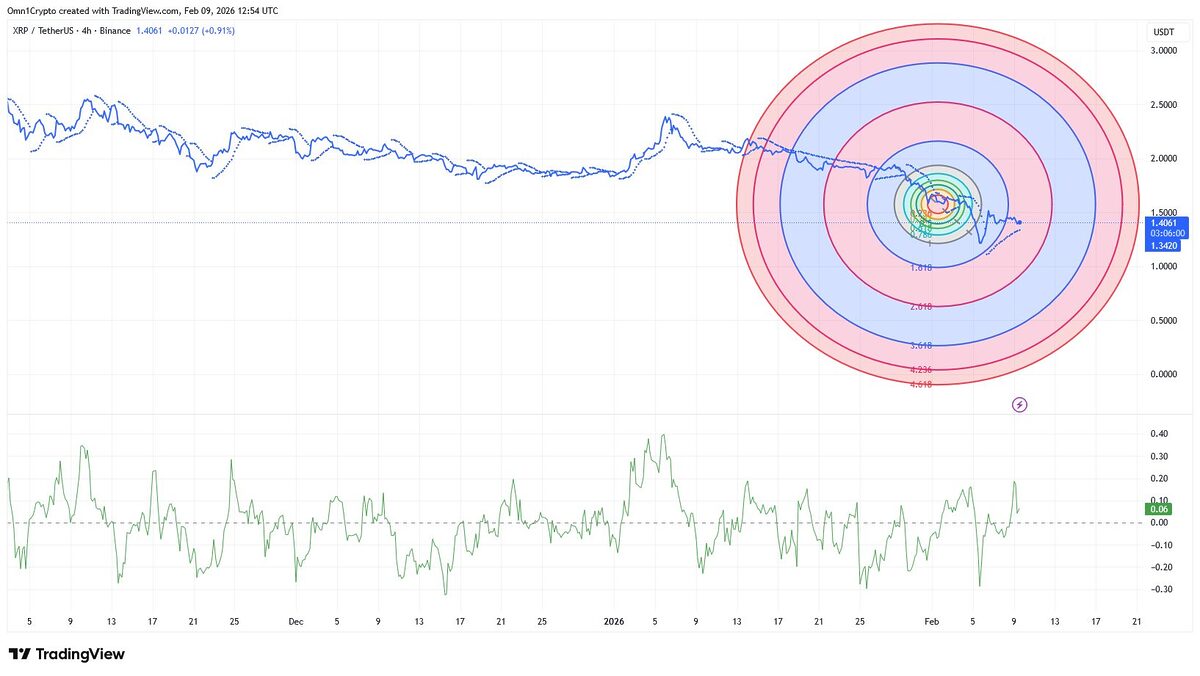

XRP’s Fib Circle Bulls Demand $1.40 Close To Restore $3

BC-US-Foreign Exchange

BC-Sugar Futures